Taking machine learning further

We’ve all heard the saying “practice makes perfect”. While this is normally a piece of advice shared with someone learning a new skill, it applies to machine learning as well.

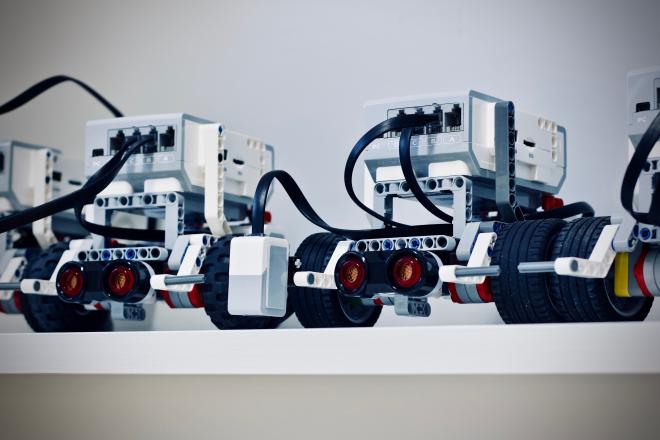

When a robot uses a trial-and-error process to navigate a building layout with zero collisions, that is practice in action. Known as reinforcement learning, this subfield of artificial intelligence (AI) is at the heart of Yiming Peng’s work with the Evolutionary Computation Research Group at Victoria University of Wellington.

Yiming has been using NeSI computing resources to develop new algorithms that will make it easier for computers to solve complex reinforcement learning problems. His recent PhD project explored how to improve the efficiency of existing algorithms and how to design better representations of critical reinforcement learning components.

“We have developed several new reinforcement learning algorithms that have achieved superior and competitive performance in comparison to several state-of-the-art algorithms reported in the literature,” he says.

Access to computational resources can be a critical factor for research success in the AI domain, he notes. For example, the problems he was investigating for his PhD project relied on his ability to run large-scale computational experiments.

“I couldn't imagine how long my PhD journey would go if I hadn't used the high performance computing resources of NeSI,” he says.

The results of his work already show promise for real-world applications. Yiming has been collaborating on a biology project to help ecologists identify animals' activities from images captured by camera traps in Wellington.

“In the project, I have been mainly adopting image recognition techniques, which had given reasonably good results,” says Yiming. “Some recent findings suggest that reinforcement learning techniques can be utilised to facilitate image recognition tasks. So, it is worthy to provide an attempt of getting my algorithms involved in the project.”

With his PhD now complete, Yiming is currently looking for an AI-related job in industry, but says he also has other research project ideas he’d like to pursue.

“One of the projects I am going to start is to use reinforcement learning to facilitate supervised learning tasks such as classification, which undoubtedly will involve large computations,” he says. “Thus, I am pretty sure that I will continue to use NeSI.”

Yiming’s recent publications, for which he used NeSI resources, include:

Y. Peng, G. Chen, M. Zhang, and S. Pang. “Generalized Compatible Function Approximation for Policy Gradient Search,” in The 23rd International Conference on Neural Information Processing (ICONIP 2016), 2016.

Y. Peng, G. Chen, S. Holdaway, Y. Mei, and M. Zhang. “Automated State Feature Learning for Actor-Critic Reinforcement Learning through NEAT,” in The Genetic and Evolutionary Computation Conference (GECCO Companion 2017), 2017.

Y. Peng, G. Chen, M. Zhang, and Y. Mei. “Effective Policy Gradient Search for Reinforcement Learning Through NEAT Based Feature Extraction,” in Simulated Evolution and Learning - 11th International Conference (SEAL 2017), 2017.

W. Hardwick-Smith, Y. Peng, G. Chen, Y. Mei, and M. Zhang. “Evolving Transferable Artificial Neural Networks for Gameplay Tasks via NEAT with Phased Searching,” in Australasian Conference on Artificial Intelligence 2017 (AI 2017), 2017.

Y. Peng, G. Chen, M. Zhang, and S. Pang. “A Sandpile Model for Reliable Actor-Critic Reinforcement Learning,” in 2017 International Joint Conference on Neural Networks (IJCNN 2017), 2017.

G. Chen, Y. Peng, and M. Zhang. “Constrained Expectation-Maximization Methods for Effective Reinforcement Learning,” in 2018 International Joint Conference on Neural Networks (IJCNN 2018), 2018.

G. Chen, Y. Peng, and M. Zhang. “An Adaptive Clipping Approach for Proximal Policy Optimization,” arXiv:1804.06461, 2018.
