Scouring continuous seismic data for Low-Frequency Earthquakes

PhD candidate Calum Chamberlain, of Victoria University of Wellington’s School of Geography, Environment and Earth Sciences, has used NeSI’s Pan cluster to improve our understanding of the properties of earthquakes and faults. That understanding is often limited by which earthquakes can be detected.

Large earthquakes are easy to detect but occur infrequently, whereas small earthquakes occur frequently but are hard to detect because their signals have low amplitudes. Detecting and analysing small earthquakes is particularly important on faults that are likely to generate large earthquakes but have not done so within recorded history.

New Zealand's Alpine Fault is thought to produce large (magnitude 7–8) earthquakes roughly every 330 years, and its last large earthquake is thought to have occurred in 1717 AD. In this context, understanding how small earthquakes are distributed along the fault in both space and time may help us to understand how the next Alpine Fault earthquake will behave.

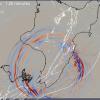

To enable the detection of small earthquakes associated with the Alpine Fault, Chamberlain and his colleagues have spent the last 6½ years recording continuous seismic data on a network of highly sensitive seismometers deployed in shallow boreholes and at the surface in the high Southern Alps. To make use of this large dataset (~1.5 TB), they adapted a cross-correlation detection method, often called template matching, in which previously detected earthquakes are used as templates to scan through the continuous data and find similar events.
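The core of template matching can be sketched in a few lines. The example below is a minimal, single-channel illustration using NumPy, not the team's actual code: it slides a template along a trace, computes the normalized (Pearson) cross-correlation at every lag, and flags peaks that exceed a multiple of the median absolute correlation. The threshold rule (`k` times the median absolute value) and the names `normalized_xcorr` and `detect` are assumptions made for illustration; real workflows typically combine correlations from many stations and channels before applying a threshold.

```python
import numpy as np


def normalized_xcorr(template: np.ndarray, data: np.ndarray) -> np.ndarray:
    """Pearson correlation between `template` and every same-length window of `data`."""
    n = len(template)
    # Demean and scale the template once so each lag needs only a dot product.
    t = (template - template.mean()) / (template.std() * n)
    windows = np.lib.stride_tricks.sliding_window_view(data, n)
    w_std = windows.std(axis=1)
    cc = np.zeros(len(windows))
    # t sums to zero, so windows @ t equals the dot product with demeaned windows.
    np.divide(windows @ t, w_std, out=cc, where=w_std > 0)
    return cc


def detect(template: np.ndarray, data: np.ndarray, k: float = 8.0) -> list[int]:
    """Sample offsets where the template matches (hypothetical threshold rule)."""
    cc = normalized_xcorr(template, data)
    threshold = k * np.median(np.abs(cc))
    candidates = np.flatnonzero(cc > threshold)
    picks: list[int] = []
    # Take the strongest peaks first and ignore others within one template length.
    for i in candidates[np.argsort(cc[candidates])[::-1]]:
        if all(abs(int(i) - p) >= len(template) for p in picks):
            picks.append(int(i))
    return sorted(picks)
```

On a noisy trace containing a few scaled copies of the template, `detect(template, trace)` should return offsets at or near the points where the copies were inserted; suppressing nearby peaks stops a single event from being counted many times.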

This method is particularly effective for earthquakes that repeat, which, as Chamberlain explains, “is interpreted to mean that slip (the relative displacement of two formerly adjacent points on the opposite sides of a fault) is recurring on the same patch of a fault multiple times.” In particular, it is better than standard detection methods at detecting the seismic signatures of recently recognised “slow-slip” earthquakes (commonly known as “silent earthquakes”). Slow-slip events have been documented on many major faults around the world using continuous global positioning system (GPS) networks, and are thought to transfer stress from the deep extent of these faults to the shallow earthquake-generating regions in the cooler, more brittle crust.

Because of the Alpine Fault's geometry and sparse network coverage, GPS cannot currently be used to detect slow-slip events there. However, slow-slip events often generate low-frequency seismic energy made up of many small low-frequency earthquakes (LFEs), so LFEs can be used as a proxy for slow slip. The research team had previously generated a preliminary catalogue of LFEs beneath the Southern Alps and showed that they occur near the inferred deep extent of the Alpine Fault. By looking at how the LFE detection rate changed over time, they found that the deep extent of the Alpine Fault is sensitive to stresses caused by large regional earthquakes.
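A change in detection rate can be quantified, in its simplest form, by counting detections in time windows. The sketch below counts detections per day and compares the number of detections in equal-length windows before and after a given time, such as the origin time of a regional earthquake. The function names, the use of days as the time unit, and the 30-day default window are illustrative assumptions, not the statistical approach used in the study.

```python
import numpy as np


def daily_counts(times_days: np.ndarray, start_day: float, n_days: int) -> np.ndarray:
    """Number of detections in each one-day bin starting at `start_day`."""
    edges = start_day + np.arange(n_days + 1)
    counts, _ = np.histogram(times_days, bins=edges)
    return counts


def rate_ratio(times_days: np.ndarray, event_day: float, window_days: float = 30.0) -> float:
    """Detections in the window after `event_day` divided by those in the window before it."""
    times = np.asarray(times_days, dtype=float)
    before = np.count_nonzero((times >= event_day - window_days) & (times < event_day))
    after = np.count_nonzero((times >= event_day) & (times < event_day + window_days))
    # A ratio well above 1 hints that the detection rate rose after the event.
    return after / before if before else float("inf")
```

In practice a ratio like this would need a significance test, for example against a Poisson model of the background rate, before being interpreted as a real change.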

“Our previous study was limited by our ability to generate templates to use in the cross-correlation method,” says Chamberlain, “so to improve on this preliminary catalogue we had to include more LFE sources as templates to target. To allow the use of much greater numbers of LFE templates, we developed a multi-parallel workflow in Python. Applying this workflow to many thousands of LFE templates, however, required more computational power than we originally had access to.”
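One common way to parallelise template matching is to treat each template as an independent task, since correlating one template against the data does not depend on any other. The sketch below illustrates that idea with Python's standard `concurrent.futures` module; it is not the team's workflow, and `best_match` and `scan_templates` are hypothetical names. It uses a thread pool because NumPy releases the global interpreter lock during much of its numerical work; a `ProcessPoolExecutor` (which needs an `if __name__ == "__main__":` guard on some platforms), or MPI across cluster nodes, could be substituted for larger jobs.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def best_match(template: np.ndarray, data: np.ndarray) -> tuple[int, float]:
    """Offset and value of the highest normalized cross-correlation for one template."""
    n = len(template)
    t = (template - template.mean()) / (template.std() * n)
    windows = np.lib.stride_tricks.sliding_window_view(data, n)
    w_std = windows.std(axis=1)
    cc = np.zeros(len(windows))
    np.divide(windows @ t, w_std, out=cc, where=w_std > 0)
    i = int(np.argmax(cc))
    return i, float(cc[i])


def scan_templates(
    templates: list[np.ndarray], data: np.ndarray, workers: int = 4
) -> list[tuple[int, float]]:
    """Correlate every template against the same data, one task per template."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map preserves input order, so results line up with `templates`.
        return list(pool.map(best_match, templates, [data] * len(templates)))
```

Because each task is independent, adding templates adds work without adding coordination, which is why this style of workload tends to scale well on a cluster.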

For the second study, however, NeSI provided the researchers with access to the Pan cluster, an HPC platform that allowed them to take full advantage of the multi-parallel workflow. “This resulted in greatly reduced computational times: from several months on a standard four-core machine to less than ten hours on Pan for the full 6½-year dataset,” Chamberlain explains. “Setting up our codes to run was straightforward, and data transfer using Globus was quick thanks to the assistance and expertise of the NeSI team. We were able to transfer our 1.5 TB dataset to Pan in less than 24 hours, allowing us to migrate to the cluster quickly.”

“The speed-up provided by Pan has allowed us to experiment with new objective methods of constructing templates and detecting small earthquakes in the continuous data. This speed-up also enabled us to undertake much more experimentation, allowing us to test and develop our methods dramatically faster than we would otherwise have been able to. As a PhD student close to completion, the speed-up afforded by the NeSI infrastructure and the ease of migration came at a crucial time. Everything from the original application procedure to complex job management was straightforward and well-documented, and the expertise of the technical team was invaluable.”
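The figure Chamberlain quotes for the Globus transfer implies a sustained average throughput that can be worked out directly. Assuming decimal units (1 TB = 10^12 bytes), and since the transfer took less than 24 hours, the result below is a lower bound on the average rate:

```latex
\frac{1.5 \times 10^{12}\ \text{bytes}}{24 \times 3600\ \text{s}}
  = \frac{1.5 \times 10^{12}\ \text{bytes}}{86\,400\ \text{s}}
  \approx 1.74 \times 10^{7}\ \text{bytes/s}
  \approx 17\ \text{MB/s}
  \approx 139\ \text{Mbit/s}
```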

This research was funded by the Marsden Fund of the Royal Society of New Zealand, the Earthquake Commission, and Victoria University of Wellington.