How multithreading and vectorisation can speed up seismic simulations by 40%

The Challenge:

Develop a dynamical model of crustal deformation that can reproduce the spectrum of fault slip behaviour at the Hikurangi subduction zone.

The Solution:

Consulting with NeSI’s Computational Science Team to build and run the numerical tools required to simulate seismic wave propagation.

The Outcome:

Ultimately, this model will help New Zealanders better prepare for future massive earthquake events.

Remember the magnitude-9.1 Tohoku-oki earthquake, which shook Japan in 2011? This event triggered a powerful tsunami, with waves reaching 40 m in height and penetrating up to 10 km inland. It was the fourth most powerful earthquake recorded in the world since 1900.

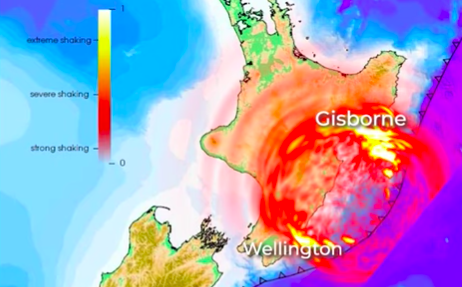

In New Zealand, we have all the conditions in place to produce a similar mega-thrust earthquake. Geodetic monitoring has revealed that a large portion of the Hikurangi subduction zone beneath the North Island of New Zealand, where the oceanic Hikurangi Plateau is slipping beneath the continental crust of the Indo-Australian Plate, is currently locked.

This implies that stress is building up. The degree of locking, however, appears to be highly variable with complex patterns of seismic and aseismic (earthquake-free) activity along the fault line. Similar patterns of mega-thrust slip activity have been observed at many other Pacific-Rim subduction zones; yet, the underlying mechanisms controlling the variability in fault slip behaviour at Hikurangi, as well as at other subduction zones, are poorly understood.

GNS Scientist Dr Yoshihiro Kaneko and Victoria University of Wellington PhD student Bryant Chow are applying state-of-the-art seismological techniques and numerical modelling, combined with existing and new seismic datasets, to shed light on the underlying mechanism of complex mega-thrust slip behaviour at subduction plate boundaries.

Together, Yoshihiro and Bryant are producing detailed images of the 3D structure and geometry of the Hikurangi mega-thrust region using a technique called seismic tomography.

Using the knowledge gained from these images, they are working to develop a dynamical model of crustal deformation that can reproduce the spectrum of fault slip behaviour at the Hikurangi subduction zone. Ultimately, this model will help New Zealanders better prepare for future massive earthquake events.

NeSI resources have been essential to their work, particularly the expertise of NeSI Computational Science Team members Alex Pletzer and Wolfgang Hayek.

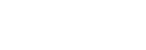

Alex and Wolfgang have been assisting Yoshihiro and Bryant in building the numerical tools required to simulate seismic wave propagation for the Hikurangi subduction zone. One such tool generates a mesh. A second tool, the community finite element code SPECFEM3D, reads the mesh and solves for the propagation of seismic waves.

Together these tools demand hundreds of thousands of computational core hours to calculate the fault slip spectrum. Because of the scope and impact of the research, it is essential that these tools be optimised to run in the most efficient manner on NeSI’s High Performance Computing (HPC) platform.

Execution speed depends on hardware, compiler, compiler options, and runtime tuning. NeSI’s HPC systems have the Cray, GNU and Intel compilers installed, which allowed Alex and Wolfgang to choose the compiler that produces the fastest code.

Previous optimisation work with Alex demonstrated that the choice of compiler can improve performance by as much as 30%.

In this latest project, Alex and Wolfgang demonstrated the benefit of vectorisation, as well as of running the mesh generation and finite element solver in hybrid MPI/OpenMP mode. Using hybrid parallelism in conjunction with the Cray compiler resulted in a 1.4x performance improvement over the previous best solution, which used pure MPI and the GNU compiler, with the same amount of resources.

This solution means that Yoshihiro and Bryant will be able to reduce their consumption by 100,000 core hours, saving both operating and energy costs – a win-win for researchers and NeSI.
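To see how a 1.4x speed-up relates to core hours saved, the arithmetic can be sketched in a few lines of Python. This is only an illustration, not the team's own accounting: the function names are hypothetical, and it assumes the whole 100,000 core-hour saving comes from the 1.4x improvement, which the figures above do not state explicitly. Note that a 1.4x speed-up means 40% more work done per core hour, while the core hours needed for a fixed amount of work fall by about 29% (1 − 1/1.4).

```python
def fraction_saved(speedup: float) -> float:
    """Fraction of core hours saved when the same work runs `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


def implied_baseline(core_hours_saved: float, speedup: float) -> float:
    """Baseline core hours that would yield `core_hours_saved` at the given speed-up.

    Assumption (not from the article): the entire saving is due to the speed-up.
    """
    return core_hours_saved / fraction_saved(speedup)


if __name__ == "__main__":
    speedup = 1.4        # figure quoted in the article
    saved = 100_000      # core hours saved, as quoted in the article

    print(f"Fraction of core hours saved: {fraction_saved(speedup):.1%}")
    print(f"Implied baseline budget: {implied_baseline(saved, speedup):,.0f} core hours")
```

Under that assumption, the saving corresponds to a baseline budget of roughly 350,000 core hours, which is consistent with the "hundreds of thousands of computational core hours" mentioned earlier.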

Interested in learning how NeSI’s Computational Science Team can help you? Or have an example of how NeSI platforms have supported your work? Get in touch by emailing support@nesi.org.nz.